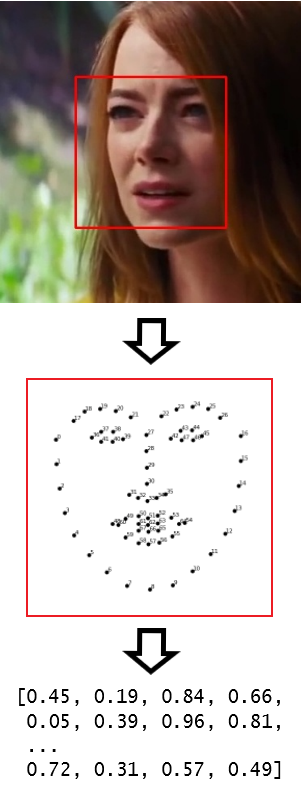

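1. Find the regions of the image that contain a face (face location).

2. Extract 68 landmark coordinates (eyes, nose, mouth, and so on) from each face region.

3. Represent the 68 coordinates as 128 numbers (face encoding).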
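
Once a face is reduced to 128 numbers, two faces can be compared by the Euclidean distance between their encodings, which is what `face_recognition.face_distance` computes in the listing that follows. Here is a minimal sketch of that comparison in plain Python. The vectors are made-up 4-number stand-ins for real 128-number encodings, the helper name `is_same_person` is hypothetical, and 0.44 is the script's default threshold.

```python
import math

def face_distance(known_encodings, candidate):
    """Euclidean distance from the candidate to each known encoding."""
    return [math.dist(known, candidate) for known in known_encodings]

def is_same_person(encoding_a, encoding_b, threshold=0.44):
    """Hypothetical helper: True when the distance is below the threshold."""
    return math.dist(encoding_a, encoding_b) < threshold

# toy 4-number "encodings" (assumed values); real ones have 128 numbers
alice_1 = [0.10, 0.20, 0.30, 0.40]
alice_2 = [0.12, 0.18, 0.33, 0.41]
bob = [0.90, 0.10, 0.70, 0.20]

distances = face_distance([alice_1, bob], alice_2)
print([round(d, 3) for d in distances])
print(is_same_person(alice_1, alice_2), is_same_person(bob, alice_2))
```

A smaller threshold makes the match stricter, so fewer faces are merged into the same person.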

face_classifier.py

#!/usr/bin/env python3

from person_db import Person
from person_db import Face
from person_db import PersonDB
import face_recognition
import numpy as np
from datetime import datetime
import cv2

class FaceClassifier():
    def __init__(self, threshold, ratio):
        self.similarity_threshold = threshold
        self.ratio = ratio

    def get_face_image(self, frame, box):
        # crop the face with a margin of one box size on every side
        img_height, img_width = frame.shape[:2]
        (box_top, box_right, box_bottom, box_left) = box
        box_width = box_right - box_left
        box_height = box_bottom - box_top
        crop_top = max(box_top - box_height, 0)
        pad_top = -min(box_top - box_height, 0)
        crop_bottom = min(box_bottom + box_height, img_height - 1)
        pad_bottom = max(box_bottom + box_height - img_height, 0)
        crop_left = max(box_left - box_width, 0)
        pad_left = -min(box_left - box_width, 0)
        crop_right = min(box_right + box_width, img_width - 1)
        pad_right = max(box_right + box_width - img_width, 0)
        face_image = frame[crop_top:crop_bottom, crop_left:crop_right]
        if (pad_top == 0 and pad_bottom == 0):
            if (pad_left == 0 and pad_right == 0):
                return face_image
        # the margin runs past the frame edge: fill the missing area with a border
        padded = cv2.copyMakeBorder(face_image, pad_top, pad_bottom,
                                    pad_left, pad_right, cv2.BORDER_CONSTANT)
        return padded

    # return list of (top, right, bottom, left) boxes
    def locate_faces(self, frame):
        #start_time = time.time()
        if self.ratio == 1.0:
            rgb = frame[:, :, ::-1]
        else:
            small_frame = cv2.resize(frame, (0, 0), fx=self.ratio, fy=self.ratio)
            rgb = small_frame[:, :, ::-1]
        boxes = face_recognition.face_locations(rgb)
        #elapsed_time = time.time() - start_time
        #print("locate_faces takes %.3f seconds" % elapsed_time)
        if self.ratio == 1.0:
            return boxes
        # scale the boxes back to the size of the original frame
        boxes_org_size = []
        for box in boxes:
            (top, right, bottom, left) = box
            left = int(left / self.ratio)
            right = int(right / self.ratio)
            top = int(top / self.ratio)
            bottom = int(bottom / self.ratio)
            box_org_size = (top, right, bottom, left)
            boxes_org_size.append(box_org_size)
        return boxes_org_size

    def detect_faces(self, frame):
        boxes = self.locate_faces(frame)
        if len(boxes) == 0:
            return []

        # faces found
        faces = []
        now = datetime.now()
        str_ms = now.strftime('%Y%m%d_%H%M%S.%f')[:-3] + '-'
        # note: frame is still in OpenCV's BGR channel order here
        encodings = face_recognition.face_encodings(frame, boxes)
        for i, box in enumerate(boxes):
            face_image = self.get_face_image(frame, box)
            face = Face(str_ms + str(i) + ".png", face_image, encodings[i])
            face.location = box
            faces.append(face)
        return faces

    def compare_with_known_persons(self, face, persons):
        if len(persons) == 0:
            return None

        # see if the face is a match for the faces of known person
        encodings = [person.encoding for person in persons]
        distances = face_recognition.face_distance(encodings, face.encoding)
        index = np.argmin(distances)
        min_value = distances[index]
        if min_value < self.similarity_threshold:
            # face of known person
            persons[index].add_face(face)
            # re-calculate encoding
            persons[index].calculate_average_encoding()
            face.name = persons[index].name
            return persons[index]
        # no known person is close enough
        return None

    def compare_with_unknown_faces(self, face, unknown_faces):
        if len(unknown_faces) == 0:
            # this is the first face
            unknown_faces.append(face)
            face.name = "unknown"
            return None

        encodings = [f.encoding for f in unknown_faces]
        distances = face_recognition.face_distance(encodings, face.encoding)
        index = np.argmin(distances)
        min_value = distances[index]
        if min_value < self.similarity_threshold:
            # two faces are similar - create new person with two faces
            person = Person()
            newly_known_face = unknown_faces.pop(index)
            person.add_face(newly_known_face)
            person.add_face(face)
            person.calculate_average_encoding()
            face.name = person.name
            newly_known_face.name = person.name
            return person
        else:
            # unknown face
            unknown_faces.append(face)
            face.name = "unknown"
            return None

    def draw_name(self, frame, face):
        color = (0, 0, 255)
        thickness = 2
        (top, right, bottom, left) = face.location

        # draw box
        width = 20
        if width > (right - left) // 3:
            width = (right - left) // 3
        height = 20
        if height > (bottom - top) // 3:
            height = (bottom - top) // 3
        cv2.line(frame, (left, top), (left+width, top), color, thickness)
        cv2.line(frame, (right, top), (right-width, top), color, thickness)
        cv2.line(frame, (left, bottom), (left+width, bottom), color, thickness)
        cv2.line(frame, (right, bottom), (right-width, bottom), color, thickness)
        cv2.line(frame, (left, top), (left, top+height), color, thickness)
        cv2.line(frame, (right, top), (right, top+height), color, thickness)
        cv2.line(frame, (left, bottom), (left, bottom-height), color, thickness)
        cv2.line(frame, (right, bottom), (right, bottom-height), color, thickness)

        # draw name
        #cv2.rectangle(frame, (left, bottom + 35), (right, bottom), (0, 0, 255), cv2.FILLED)
        font = cv2.FONT_HERSHEY_DUPLEX
        cv2.putText(frame, face.name, (left + 6, bottom + 30), font, 1.0,
                    (255, 255, 255), 1)

if __name__ == '__main__':
    import argparse
    import signal
    import time
    import os

    ap = argparse.ArgumentParser()
    ap.add_argument("inputfile",
                    help="video file to detect or '0' to detect from web cam")
    ap.add_argument("-t", "--threshold", default=0.44, type=float,
                    help="threshold of the similarity (default=0.44)")
    ap.add_argument("-S", "--seconds", default=1, type=float,
                    help="seconds between capture")
    ap.add_argument("-s", "--stop", default=0, type=int,
                    help="stop detecting after # seconds")
    ap.add_argument("-k", "--skip", default=0, type=int,
                    help="skip detecting for # seconds from the start")
    ap.add_argument("-d", "--display", action='store_true',
                    help="display the frame in real time")
    ap.add_argument("-c", "--capture", type=str,
                    help="save the frames with face in the CAPTURE directory")
    ap.add_argument("-r", "--resize-ratio", default=1.0, type=float,
                    help="resize the frame to process (less time, less accuracy)")
    args = ap.parse_args()

    src_file = args.inputfile
    if src_file == "0":
        src_file = 0
    src = cv2.VideoCapture(src_file)
    if not src.isOpened():
        print("cannot open inputfile", src_file)
        exit(1)

    frame_width = src.get(cv2.CAP_PROP_FRAME_WIDTH)
    frame_height = src.get(cv2.CAP_PROP_FRAME_HEIGHT)
    frame_rate = src.get(cv2.CAP_PROP_FPS)
    frames_between_capture = int(round(frame_rate * args.seconds))

    print("source", args.inputfile)
    print("original: %dx%d, %f frame/sec" % (frame_width, frame_height, frame_rate))
    ratio = float(args.resize_ratio)
    if ratio != 1.0:
        # resize_ratio is parsed as a float, so convert it before concatenating
        s = "RESIZE_RATIO: " + str(args.resize_ratio)
        s += " -> %dx%d" % (int(frame_width * ratio), int(frame_height * ratio))
        print(s)
    print("process every %d frame" % frames_between_capture)
    print("similarity threshold:", args.threshold)
    if args.stop > 0:
        print("Detecting will be stopped after %d second." % args.stop)

    # load person DB
    result_dir = "result"
    pdb = PersonDB()
    pdb.load_db(result_dir)
    pdb.print_persons()

    # prepare capture directory
    num_capture = 0
    if args.capture:
        print("Captured frames are saved in '%s' directory." % args.capture)
        if not os.path.isdir(args.capture):
            os.mkdir(args.capture)

    # set SIGINT (^C) handler
    def signal_handler(sig, frame):
        global running
        running = False
    prev_handler = signal.signal(signal.SIGINT, signal_handler)
    if args.display:
        print("Press q to stop detecting...")
    else:
        print("Press ^C to stop detecting...")

    fc = FaceClassifier(args.threshold, ratio)
    frame_id = 0
    running = True

    total_start_time = time.time()

    while running:
        ret, frame = src.read()
        if frame is None:
            break
        frame_id += 1
        if frame_id % frames_between_capture != 0:
            continue

        seconds = round(frame_id / frame_rate, 3)
        if args.stop > 0 and seconds > args.stop:
            break
        if seconds < args.skip:
            continue

        start_time = time.time()

        # this is core
        faces = fc.detect_faces(frame)
        for face in faces:
            person = fc.compare_with_known_persons(face, pdb.persons)
            if person:
                continue
            person = fc.compare_with_unknown_faces(face, pdb.unknown.faces)
            if person:
                pdb.persons.append(person)

        if args.display or args.capture:
            for face in faces:
                fc.draw_name(frame, face)
        if args.capture and len(faces) > 0:
            now = datetime.now()
            filename = now.strftime('%Y%m%d_%H%M%S.%f')[:-3] + '.png'
            pathname = os.path.join(args.capture, filename)
            cv2.imwrite(pathname, frame)
            num_capture += 1
        if args.display:
            cv2.imshow("Frame", frame)
            # imshow always works with waitKey
            key = cv2.waitKey(1) & 0xFF
            # if the `q` key was pressed, break from the loop
            if key == ord("q"):
                running = False

        elapsed_time = time.time() - start_time

        s = "\rframe " + str(frame_id)
        s += " @ time %.3f" % seconds
        s += " takes %.3f second" % elapsed_time
        s += ", %d new faces" % len(faces)
        s += " -> " + repr(pdb)
        if num_capture > 0:
            s += ", %d captures" % num_capture
        print(s, end=" ")

    # restore SIGINT (^C) handler
    signal.signal(signal.SIGINT, prev_handler)
    running = False
    src.release()
    total_elapsed_time = time.time() - total_start_time
    print()
    print("total elapsed time: %.3f second" % total_elapsed_time)

    pdb.save_db(result_dir)
    pdb.print_persons()
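
The grouping logic in the main loop (try the known persons first, then the unknown faces, and promote two similar unknown faces to a new person) can be sketched without OpenCV or `face_recognition`. The code below is a simplified illustration under stated assumptions, not the script's own implementation. The `classify` function and its dict-based storage are hypothetical, 2-number vectors stand in for 128-number encodings, and the `person_%02d` naming is derived from the dict size instead of the `Person._last_id` counter.

```python
import math

def classify(encoding, persons, unknown, threshold=0.44):
    """Assign one encoding to a person, or hold it as unknown.

    persons: dict mapping a name to a list of encodings (hypothetical storage)
    unknown: list of encodings that have not matched anything yet
    Returns the person's name, or None if the face stays unknown.
    """
    def mean(vectors):
        return [sum(col) / len(col) for col in zip(*vectors)]

    # 1. compare with the average encoding of every known person
    if persons:
        name, dist = min(
            ((n, math.dist(mean(encs), encoding)) for n, encs in persons.items()),
            key=lambda pair: pair[1],
        )
        if dist < threshold:
            persons[name].append(encoding)
            return name

    # 2. compare with the unknown faces seen so far
    if unknown:
        index = min(range(len(unknown)),
                    key=lambda i: math.dist(unknown[i], encoding))
        if math.dist(unknown[index], encoding) < threshold:
            # two similar unknown faces become a new person
            name = "person_%02d" % (len(persons) + 1)
            persons[name] = [unknown.pop(index), encoding]
            return name

    # 3. nothing similar yet: remember the face as unknown
    unknown.append(encoding)
    return None

persons, unknown = {}, []
samples = ([0.0, 0.0], [0.1, 0.0], [1.0, 1.0], [0.05, 0.05])
results = [classify(e, persons, unknown) for e in samples]
print(results)
```

A face only becomes a person after it has been seen twice, which keeps one-off false detections in the unknown pool.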

person_db.py

import os
import cv2
import imutils
import shutil
import face_recognition
import numpy as np
import time
import pickle

class Face():
    key = "face_encoding"

    def __init__(self, filename, image, face_encoding):
        self.filename = filename
        self.image = image
        self.encoding = face_encoding

    def save(self, base_dir):
        # save image
        pathname = os.path.join(base_dir, self.filename)
        cv2.imwrite(pathname, self.image)

    @classmethod
    def get_encoding(cls, image):
        rgb = image[:, :, ::-1]
        boxes = face_recognition.face_locations(rgb, model="hog")
        if not boxes:
            # no face found: fall back to the central third of the image
            height, width, channels = image.shape
            top = int(height/3)
            bottom = int(top*2)
            left = int(width/3)
            right = int(left*2)
            box = (top, right, bottom, left)
        else:
            box = boxes[0]
        return face_recognition.face_encodings(image, [box])[0]

class Person():
    _last_id = 0

    def __init__(self, name=None):
        if name is None:
            # auto-generate the next "person_NN" name
            Person._last_id += 1
            self.name = "person_%02d" % Person._last_id
        else:
            self.name = name
            # keep the counter ahead of any loaded "person_NN" name
            if name.startswith("person_") and name[7:].isdigit():
                person_id = int(name[7:])
                if person_id > Person._last_id:
                    Person._last_id = person_id
        self.encoding = None
        self.faces = []

    def add_face(self, face):
        # add face
        self.faces.append(face)

    def calculate_average_encoding(self):
        # compare by value; `is 0` relies on integer identity and triggers a SyntaxWarning
        if len(self.faces) == 0:
            self.encoding = None
        else:
            encodings = [face.encoding for face in self.faces]
            self.encoding = np.average(encodings, axis=0)

    def distance_statistics(self):
        encodings = [face.encoding for face in self.faces]
        distances = face_recognition.face_distance(encodings, self.encoding)
        return min(distances), np.mean(distances), max(distances)

    def save_faces(self, base_dir):
        pathname = os.path.join(base_dir, self.name)
        try:
            shutil.rmtree(pathname)
        except OSError:
            pass
        os.mkdir(pathname)
        for face in self.faces:
            face.save(pathname)

    def save_montages(self, base_dir):
        images = [face.image for face in self.faces]
        montages = imutils.build_montages(images, (128, 128), (6, 2))
        for i, montage in enumerate(montages):
            filename = "montage." + self.name + ("-%02d.png" % i)
            pathname = os.path.join(base_dir, filename)
            cv2.imwrite(pathname, montage)

    @classmethod
    def load(cls, pathname, face_encodings):
        basename = os.path.basename(pathname)
        person = Person(basename)
        for face_filename in os.listdir(pathname):
            face_pathname = os.path.join(pathname, face_filename)
            image = cv2.imread(face_pathname)
            # cv2.imread returns None when the file cannot be read as an image
            if image is None or image.size == 0:
                continue
            if face_filename in face_encodings:
                face_encoding = face_encodings[face_filename]
            else:
                print(pathname, face_filename, "calculate encoding")
                face_encoding = Face.get_encoding(image)
            if face_encoding is None:
                print(pathname, face_filename, "drop face")
            else:
                face = Face(face_filename, image, face_encoding)
                person.faces.append(face)
        print(person.name, "has", len(person.faces), "faces")
        person.calculate_average_encoding()
        return person

class PersonDB():
    def __init__(self):
        self.persons = []
        self.unknown_dir = "unknowns"
        self.encoding_file = "face_encodings"
        self.unknown = Person(self.unknown_dir)

    def load_db(self, dir_name):
        if not os.path.isdir(dir_name):
            return
        print("Start loading persons in the directory '%s'" % dir_name)
        start_time = time.time()

        # read face_encodings
        pathname = os.path.join(dir_name, self.encoding_file)
        try:
            with open(pathname, "rb") as f:
                face_encodings = pickle.load(f)
                print(len(face_encodings), "face_encodings in", pathname)
        except Exception:
            # missing or unreadable cache: encodings are recalculated on load
            face_encodings = {}

        # read persons
        for entry in os.scandir(dir_name):
            if entry.is_dir(follow_symlinks=False):
                pathname = os.path.join(dir_name, entry.name)
                person = Person.load(pathname, face_encodings)
                if len(person.faces) == 0:
                    continue
                if entry.name == self.unknown_dir:
                    self.unknown = person
                else:
                    self.persons.append(person)
        elapsed_time = time.time() - start_time
        print("Loading persons finished in %.3f sec." % elapsed_time)

    def save_encodings(self, dir_name):
        face_encodings = {}
        for person in self.persons:
            for face in person.faces:
                face_encodings[face.filename] = face.encoding
        for face in self.unknown.faces:
            face_encodings[face.filename] = face.encoding
        pathname = os.path.join(dir_name, self.encoding_file)
        with open(pathname, "wb") as f:
            pickle.dump(face_encodings, f)
        print(pathname, "saved")

    def save_montages(self, dir_name):
        for person in self.persons:
            person.save_montages(dir_name)
        self.unknown.save_montages(dir_name)
        print("montages saved")

    def save_db(self, dir_name):
        print("Start saving persons in the directory '%s'" % dir_name)
        start_time = time.time()
        try:
            shutil.rmtree(dir_name)
        except OSError:
            pass
        os.mkdir(dir_name)

        for person in self.persons:
            person.save_faces(dir_name)
        self.unknown.save_faces(dir_name)

        self.save_montages(dir_name)
        self.save_encodings(dir_name)

        elapsed_time = time.time() - start_time
        print("Saving persons finished in %.3f sec." % elapsed_time)

    def __repr__(self):
        s = "%d persons" % len(self.persons)
        num_known_faces = sum(len(person.faces) for person in self.persons)
        s += ", %d known faces" % num_known_faces
        s += ", %d unknown faces" % len(self.unknown.faces)
        return s

    def print_persons(self):
        print(self)
        persons = sorted(self.persons, key=lambda obj : obj.name)
        encodings = [person.encoding for person in persons]
        for person in persons:
            # one row per person: distance to every person's average encoding,
            # then min/mean/max distance of the person's own faces
            distances = face_recognition.face_distance(encodings, person.encoding)
            s = "{:10} [ ".format(person.name)
            s += " ".join(["{:5.3f}".format(x) for x in distances])
            mn, av, mx = person.distance_statistics()
            s += " ] %.3f, %.3f, %.3f" % (mn, av, mx)
            s += ", %d faces" % len(person.faces)
            print(s)

if __name__ == '__main__':
    dir_name = "result"
    pdb = PersonDB()
    pdb.load_db(dir_name)
    pdb.print_persons()
    pdb.save_montages(dir_name)
    pdb.save_encodings(dir_name)
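
Each `Person` is represented by the element-wise average of its faces' encodings, and `distance_statistics` reports how far the individual faces lie from that average. The following plain-Python sketch mirrors those two methods without NumPy. The function names are borrowed for illustration and the 2-number vectors are assumed toy values, not real encodings.

```python
import math

def average_encoding(encodings):
    """Element-wise mean of a list of equal-length encodings."""
    if not encodings:
        return None
    return [sum(column) / len(column) for column in zip(*encodings)]

def distance_statistics(encodings):
    """(min, mean, max) distance from each encoding to the average."""
    center = average_encoding(encodings)
    distances = [math.dist(e, center) for e in encodings]
    return min(distances), sum(distances) / len(distances), max(distances)

# toy 2-number "encodings" (assumed values)
faces = [[0.0, 0.0], [0.2, 0.0], [0.1, 0.3]]
center = average_encoding(faces)
mn, av, mx = distance_statistics(faces)
print(center)
print("%.3f, %.3f, %.3f" % (mn, av, mx))
```

A large maximum distance for one person suggests that faces of different people were merged, which is a hint to lower the threshold.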

$ python face_classifier.py "TWICE 'Alcohol-Free' M_V-XA2YEHn-A8Q.webm" -t 0.4 -c CAPTURE -S 0.5

$ python face_classifier.py "TWICE 'Alcohol-Free' M_V-XA2YEHn-A8Q.webm" -d
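
The `-S` option sets how many seconds pass between processed frames; the script turns it into a frame stride with `int(round(frame_rate * args.seconds))` and skips every frame whose id is not a multiple of that stride. A small sketch of the arithmetic follows, assuming a 30 fps source (the real rate is read from the video file) and a hypothetical helper `processed_frame_ids`.

```python
def frames_between_capture(frame_rate, seconds):
    """Number of frames to advance between two processed frames."""
    return int(round(frame_rate * seconds))

def processed_frame_ids(total_frames, frame_rate, seconds):
    """1-based ids of the frames that the main loop would process."""
    stride = frames_between_capture(frame_rate, seconds)
    return [i for i in range(1, total_frames + 1) if i % stride == 0]

# assumed 30 fps source with -S 0.5
stride = frames_between_capture(30.0, 0.5)
ids = processed_frame_ids(60, 30.0, 0.5)
print(stride)
print(ids)
```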

usage: face_classifier.py [-h] [-t THRESHOLD] [-S SECONDS] [-s STOP] [-k SKIP]
                          [-d] [-c CAPTURE]
                          inputfile

positional arguments:
  inputfile             video file to detect or '0' to detect from web cam

optional arguments:
  -h, --help            show this help message and exit
  -t THRESHOLD, --threshold THRESHOLD
                        threshold of the similarity (default=0.44)
  -S SECONDS, --seconds SECONDS
                        seconds between capture
  -s STOP, --stop STOP  stop detecting after # seconds
  -k SKIP, --skip SKIP  skip detecting for # seconds from the start
  -d, --display         display the frame in real time
  -c CAPTURE, --capture CAPTURE
                        save the frames with face in the CAPTURE directory
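
Because `inputfile` is a single positional argument and `-c` takes a directory name, a file name that contains spaces has to be quoted and `-c` has to be followed by a value. The sketch below rebuilds a subset of the parser and parses one command line to show how the pieces are assigned. `shlex.split` stands in for the shell's word splitting, and the directory name `captures` is a made-up example.

```python
import argparse
import shlex

def build_parser():
    # subset of the options defined in face_classifier.py
    ap = argparse.ArgumentParser(prog="face_classifier.py")
    ap.add_argument("inputfile")
    ap.add_argument("-t", "--threshold", default=0.44, type=float)
    ap.add_argument("-S", "--seconds", default=1, type=float)
    ap.add_argument("-d", "--display", action="store_true")
    ap.add_argument("-c", "--capture", type=str)
    return ap

# shlex.split mimics how a POSIX shell splits the command line;
# "captures" is a hypothetical directory name
command = '''"TWICE 'Alcohol-Free' M_V-XA2YEHn-A8Q.webm" -t 0.4 -c captures -S 0.5'''
args = build_parser().parse_args(shlex.split(command))
print(args.inputfile)
print(args.threshold, args.seconds, args.capture, args.display)
```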